Deep learning is rapidly changing the machine vision landscape. It enables new applications and disrupts established markets. The product manager at FLIR visits companies across a wide range of industries, and every company visited this year is working on deep learning.

It has never been simpler to get started, but the difficulty is knowing where to begin. This article outlines an easy-to-follow framework for building a deep learning inference system for under $600.

What is Deep Learning Inference?

Inference is the use of a trained neural network to make predictions on new data. It is far better at answering complex and subjective questions than traditional rules-based image analysis.
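To make the definition concrete, the sketch below shows inference as a single forward pass through a model whose weights are already fixed. It is an illustration only: the weights, labels, and two-element feature vector are hypothetical placeholders, not values from any FLIR or TensorFlow model.

```python
import math

# Hypothetical weights standing in for the result of a training run.
# During inference they are only read, never updated.
WEIGHTS = [[2.0, -1.0], [-1.0, 2.0]]  # one row per class
BIASES = [0.0, 0.0]
LABELS = ["defect", "ok"]  # hypothetical class names


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def infer(features):
    """Forward pass: weighted sum per class, softmax, then pick the best class."""
    scores = [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(WEIGHTS, BIASES)
    ]
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best], probs[best]


label, confidence = infer([1.0, 0.0])  # new data the model has not seen
print(label, round(confidence, 3))
```

A real network has many more layers and parameters, but the principle is the same: training sets the weights once, and inference reuses them on each new image.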

Inference can be run on the edge near the data source by optimizing networks to run on low-power hardware. This eliminates the system’s dependence on a central server for image analysis, resulting in higher reliability, lower latency, and better security.

1. Choosing the Hardware

The aim of this guide is to create a high-quality, reliable system to deploy in the field. Combining deep learning inference with traditional computer vision methods can deliver both computational efficiency and high accuracy by leveraging the strengths of each method.

For a system that combines both methods, the Aaeon UP Squared-Celeron-4GB-32GB single-board computer (SBC) has the memory and CPU power required. Its x64 Intel CPU runs the same software as traditional desktop PCs, simplifying development compared to ARM-based SBCs.

The code that enables deep learning inference uses branching logic, and dedicated hardware can greatly accelerate its execution. The Intel® Movidius™ Myriad™ 2 Vision Processing Unit (VPU) is a powerful and efficient inference accelerator, and it is integrated into FLIR's new inference camera, the Firefly DL.
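One way to picture this combination is a cheap classical check that decides whether the more expensive inference step needs to run at all. The sketch below is an assumption about how such a pipeline might look, not the method used in the FLIR guide: `mean_abs_diff`, `analyze`, the threshold, and the stub classifier are all hypothetical names and values.

```python
def mean_abs_diff(frame, reference):
    """Classical step: average absolute pixel difference between two
    equal-sized grayscale frames given as lists of rows."""
    total = 0
    count = 0
    for row_f, row_r in zip(frame, reference):
        for p, q in zip(row_f, row_r):
            total += abs(p - q)
            count += 1
    return total / count


def analyze(frame, reference, classify, threshold=10.0):
    """Run the costly classifier only when the cheap check sees a change."""
    if mean_abs_diff(frame, reference) < threshold:
        return None  # scene unchanged, so inference is skipped
    return classify(frame)


# Stub standing in for a real inference call (hypothetical).
def stub_classifier(frame):
    return "object"


empty_scene = [[0, 0], [0, 0]]
changed_scene = [[100, 100], [100, 100]]
print(analyze(empty_scene, empty_scene, stub_classifier))
print(analyze(changed_scene, empty_scene, stub_classifier))
```

The classical step costs a few arithmetic operations per pixel, so skipping inference on unchanged frames saves compute, while the network handles the judgment that rules alone handle poorly.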

Source: FLIR Systems

| Part | Part Number | Price [USD] |
|---|---|---|
| USB3 Vision Deep Learning Enabled Camera | FFY-U3-16S2M-DL | 299 |
| Single Board Computer | UP Squared-Celeron-4GB-32GB-PACK | 239 |
| 3m USB 3 cable | ACC-01-2300 | 10 |
| Lens | ACC-01-4000 | 10 |
| Software | Ubuntu 16.04/18.04, TensorFlow, Intel NCSDK, FLIR Spinnaker SDK | 0 |
| Total | | $558 |
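As a quick check of the budget claim, the listed prices can be summed and compared with the $600 target. This is a minimal sketch using only the figures from the parts list; the dictionary layout and variable names are illustrative.

```python
# Prices in USD, taken from the parts list.
BILL_OF_MATERIALS = {
    "FFY-U3-16S2M-DL (camera)": 299,
    "UP Squared-Celeron-4GB-32GB-PACK (single-board computer)": 239,
    "ACC-01-2300 (3m USB 3 cable)": 10,
    "ACC-01-4000 (lens)": 10,
    "Software (all free)": 0,
}
BUDGET_USD = 600

total = sum(BILL_OF_MATERIALS.values())
headroom = BUDGET_USD - total

print(f"Total: ${total}, headroom under budget: ${headroom}")
```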

2. Software Requirements

This project uses a range of free and open-source tools for training, building, and deploying deep learning inference models. Each software package has installation instructions available on its website. This guide assumes that the user is familiar with the basics of the Linux console.

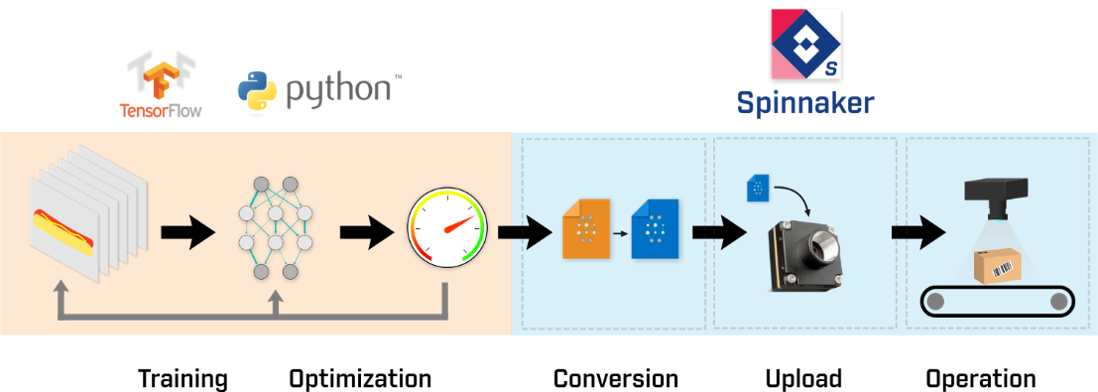

Collect training data → Train network (augmentation optional) → Evaluate performance → Convert to Movidius graph format → Deploy to Firefly DL camera → Run inference on captured images

Figure 1. Deep learning inference workflow and the associated tools for each step. Image Credit: FLIR Systems

3. Detailed Guide

Getting Started with Firefly Deep Learning on Linux introduces how to retrain a neural network, convert the resulting file into a Firefly-compatible format, and display the results using SpinView. It gives users a step-by-step process for training and converting inference networks themselves from the terminal.
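For orientation, the conversion step typically comes down to one call to the NCSDK compiler, `mvNCCompile`. The sketch below only assembles and prints such a command; it does not run it. The flags (`-s`, `-in`, `-on`, `-o`) follow the NCSDK's documented usage as best understood here, while the file name, node names, and output name are hypothetical placeholders. Check `mvNCCompile -h` and the FLIR guide for the exact values your network needs.

```python
import shlex


def build_compile_command(frozen_graph, input_node, output_node,
                          output_file, shaves=12):
    """Assemble an mvNCCompile argument list (assumed flag layout).

    -s   number of SHAVE cores to target
    -in  name of the network's input node
    -on  name of the network's output node
    -o   file name for the compiled Movidius graph
    """
    return [
        "mvNCCompile", "-s", str(shaves), frozen_graph,
        "-in", input_node, "-on", output_node, "-o", output_file,
    ]


# Hypothetical file and node names for a retrained TensorFlow classifier.
cmd = build_compile_command(
    "retrained_graph.pb", "input", "final_result", "InferenceNetwork"
)
print(" ".join(shlex.quote(arg) for arg in cmd))
```

Building the command as a list keeps each argument separate, so it can later be passed to `subprocess.run` without shell quoting problems.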

This information has been sourced, reviewed and adapted from materials provided by FLIR Systems.

For more information on this source, please visit FLIR Systems.