Dec 7 2015

A dolphin’s echolocation beam was directed at a submerged man and the echo was captured by a hydrophone system. The echo signal was sent to a sound imaging laboratory, which used a cymatic-holographic imaging technique to create the first-ever ‘what-the-dolphin-saw’ image of the submerged man.

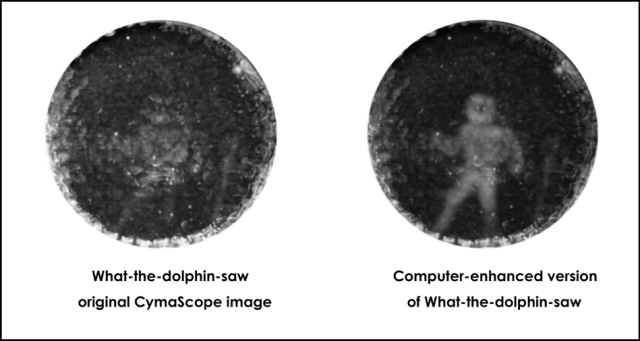

What-the-dolphin-saw original and enhanced images


Researchers in the USA and UK have made a significant breakthrough in imaging a submerged man from the echolocation beam transmitted by a dolphin. The resulting image is faint, but after enhancement key features of the man and the background are revealed. Team leader Jack Kassewitz of SpeakDolphin.com is delighted with the result: “This is the first time we have captured a what-the-dolphin-saw image of a submerged man. We employed a similar technique in 2012 to capture a dolphin’s echolocation picture of a flowerpot and several other submerged plastic objects, but the present research has confirmed that result and so much more.”

The research took place at the Dolphin Discovery Centre in Puerto Aventuras, Mexico. The submerged man, Jim McDonough, wore a weight belt and exhaled most of the air in his lungs to overcome his natural buoyancy, then arranged himself against a shelf in the research pool. The team decided not to use a breathing apparatus, so that no bubbles would adversely affect the results of the experiment. The whole event therefore had to be accomplished within a single breath.

With Jim in position, the female research dolphin, Amaya, was tasked with echolocating on the man, to ‘see’ Jim with her sound-vision sense. Most of the resulting echo from his body was reflected back to Amaya, but water shares a sonic property with air: sounds diffract in many directions simultaneously. One theoretical implication of this property is that when a dolphin sees an object with its sound-vision sense, all other dolphins in the near vicinity may also receive the image. This effect could have profound benefits for a pod of dolphins. In experimental setups it has been found that the hydrophone (a high-frequency underwater microphone) can be positioned almost anywhere in the vicinity of the target object or dolphin. A further finding is that it is not necessary to collect the whole of the dolphin’s reflected echo beam. All parts of the echo contain quasi-holographic sonic data that represents the object and from which an image of the object can be created. Light provides a good analogy for this basic principle: when light reflects from an object it contains data that represents the object; any small part of the reflected light contains an image of the object as viewed from a particular angle.

The dolphin’s echo signal was recorded by Alex Green and Toni Saul using high-specification audio equipment and sent via email to the CymaScope laboratory in the UK. Acoustic physics researcher John Stuart Reid heads the CymaScope team: “When I received the recording Jack had told me only that it might contain an echolocation reflection from someone’s face. I noticed the file name ‘Jim’ so I assumed that the image, if it existed within the file, would be that of a man’s face. I was somewhat dubious whether this could be achieved because the imaging we had carried out in 2012 was of simple plastic objects that had no inherent detail, whereas a face is a highly detailed form. I listened to the file and heard an interesting structure of clicks. The basic principle of the CymaScope instrument is that it transcribes sonic periodicities to water wavelet periodicities; in other words, the sound sample is imprinted onto a water membrane. The ability of the CymaScope to capture what-the-dolphin-saw images relates to the quasi-holographic properties of sound and its relationship with water, which will be described in a forthcoming science paper on this subject. When I injected the click train into the CymaScope, while running the camera in video mode, I saw a fleeting shape on the water’s surface that did not resemble a face. I replayed the video frame by frame and saw something entirely unexpected: the faint outline of a man. At this point I sent the image to Jack, with a note that simply read, ‘this frame has what appears to be the fuzzy silhouette of almost a full man. No face.’”

When Jack Kassewitz received the still image, he was just as surprised as Reid, because he had been unaware that Amaya had been echolocating on Jim as she approached him from several feet away. “I was astonished when I received the faint image of Jim as I had no idea that Amaya had been echolocating during its in-run. I called John in the UK and we discussed the image in detail. Later, he sent me a computer-enhanced version that revealed several details not easily seen in the raw image, such as the weight belt worn by Jim. Having demonstrated that the CymaScope can capture what-the-dolphin-saw images, our research infers that dolphins can at least see the full silhouette of an object with their echolocation sound sense, but the fact that we can just make out the weight belt worn by Jim in our what-the-dolphin-saw image suggests that dolphins can see surface features too. The dolphin has had around fifty million years to evolve its echolocation sense, whereas marine biologists have studied the physiology of cetaceans for only around five decades and I have worked with John Stuart Reid for barely five years. Even so, our recent success has left us all speechless. We now think it is safe to speculate that dolphins may employ a ‘sono-pictorial’ form of language, a language of pictures that they share with each other. If that proves to be true, an exciting future lies ahead for interspecies communications.”