Jun 9 2016

OMRON Corporation announced on June 6 that it has developed the world's first onboard sensor featuring "driver concentration-sensing technology." The technology combines OMRON's proprietary image-sensing technology with state-of-the-art AI (time-series deep learning (*)) to sense the driver's various motions and conditions and determine whether he/she is in a condition suitable for safe driving. With a view toward realizing collision-free automated driving and other advanced driver assistance systems, OMRON has dedicated itself to making our motorized society safer and more secure by innovating relationships between people and machines.

Overview of "Driver Concentration Sensing Technology"

In recent years, there have been multiple accidents in which a sudden change in the driver's condition made it difficult to continue driving. At the same time, demand has grown for technologies that assist safe driving and, eventually, enable automated driving. Against this background, OMRON's development team has been working on safe vehicle control technology that determines whether the driver is in a condition suitable for safe driving.

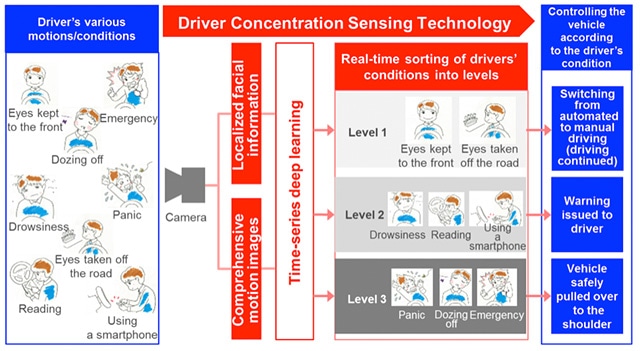

OMRON's "driver concentration-sensing technology" uses an onboard camera to detect in real time whether the driver is in a suitable condition for driving. It combines OMRON's proprietary high-precision image-sensing technology with state-of-the-art "time-series deep learning" AI technology. This makes it possible to control a vehicle based on the driver's condition, for example by safely switching between automated and manual driving or by safely bringing the vehicle to a stop if something happens to the driver, thereby assisting safe driving and enhancing the safety of our motorized society. OMRON aims to have an onboard sensor featuring this technology adopted in applications such as automated driving vehicle models to be released from 2019 to 2020.

About the technology

1. Real-time sensing of the driver's various motions/conditions to determine if he/she is in a condition suitable for driving

- During automated driving on expressways: The driver's various motions/conditions are categorized in real time as "driving handover levels" based on "how much time would be needed to resume driving" (See Fig. 2)

- During manual driving: The driver's various motions/conditions are categorized in real time as "hazard levels" based on "how safely the vehicle can be driven" (See Fig. 3)

- The number of levels and the criteria for each level can be adjusted to the vehicle manufacturer's preferences, making the technology applicable to a broad range of vehicle models.
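As an illustration of how an adjustable level scheme of this kind might be expressed, the following is a minimal sketch rather than OMRON's implementation. It assumes the sensor already produces an estimate of how many seconds the driver would need to resume driving. The class name `LevelScheme`, the thresholds, and the labels are all hypothetical; the release does not state the actual number of levels or their criteria.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class LevelScheme:
    """Hypothetical, manufacturer-tunable mapping from an estimated
    time-to-resume-driving (seconds) to a handover level label."""
    thresholds_s: tuple  # ascending boundaries in seconds, e.g. (2.0, 10.0)
    labels: tuple        # exactly one more label than thresholds

    def __post_init__(self):
        if list(self.thresholds_s) != sorted(self.thresholds_s):
            raise ValueError("thresholds must be in ascending order")
        if len(self.labels) != len(self.thresholds_s) + 1:
            raise ValueError("need exactly one more label than thresholds")

    def classify(self, seconds_to_resume: float) -> str:
        # bisect_right counts how many boundaries are <= the estimate,
        # which is the index of the level the estimate falls into.
        return self.labels[bisect_right(self.thresholds_s, seconds_to_resume)]


# Assumed example values only; a manufacturer would choose its own.
example_scheme = LevelScheme(
    thresholds_s=(2.0, 10.0),
    labels=("Level 1", "Level 2", "Level 3"),
)
level_for_quick_return = example_scheme.classify(0.5)
level_for_slow_return = example_scheme.classify(30.0)
```

Changing the number of levels in this sketch only requires supplying different `thresholds_s` and `labels` tuples, which mirrors the adjustability described above.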

2. Detection of the driver's various conditions by a single palm-sized camera

- Monitoring the driver's diverse conditions used to require combining information from multiple cameras and sensors, including a camera to detect which direction the driver is facing, a sensor to detect his/her heartbeat and other biological information, and a sensor to detect the movement of the steering wheel. By processing two kinds of images - "localized facial images" and "comprehensive motion images" - this technology uses a single compact, palm-sized camera to detect the driver's various conditions, such as dozing off, taking his/her eyes off the road, using a smartphone, or reading.

3. Real-time data processing through an in-vehicle closed system without a network connection

- Because conventional time-series deep learning handles continuous data, it requires access to a relatively large server system. OMRON's technology, however, separates visual data from the camera into "high-resolution localized facial images" and "low-resolution comprehensive motion images," and efficiently combines them to reduce the volume of images processed, thus achieving "time-series deep learning" in real time with an onboard closed system. Since it is no longer necessary to connect to a large-scale server system or to equip vehicles with network connectivity, this technology can be added to existing vehicles or introduced to vehicles in a lower price range.
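To make the data-reduction idea concrete, here is a minimal sketch of splitting one frame into a full-resolution face crop and a downsampled whole-frame image. It is an illustration under stated assumptions, not OMRON's pipeline: the frame size, the face box, the downsampling factor, and all function names are hypothetical, and the face location is assumed to be known already.

```python
def crop(frame, top, left, height, width):
    """Return the sub-image at full resolution (the localized facial image)."""
    return [row[left:left + width] for row in frame[top:top + height]]


def downsample(frame, factor):
    """Keep every factor-th pixel in each direction (the low-resolution
    comprehensive motion image). Plain striding, chosen for brevity."""
    return [row[::factor] for row in frame[::factor]]


def split_streams(frame, face_box, factor):
    """Split one frame into (face_crop, motion_image)."""
    top, left, height, width = face_box
    return crop(frame, top, left, height, width), downsample(frame, factor)


def pixel_count(image):
    return sum(len(row) for row in image)


# Toy grayscale frame: 48 rows x 64 columns of synthetic pixel values.
frame = [[(r * 64 + c) % 256 for c in range(64)] for r in range(48)]

# Assumed 16x16 face region and an 8x downsampling factor.
face, motion = split_streams(frame, face_box=(8, 24, 16, 16), factor=8)

total_pixels = pixel_count(frame)                      # 48 * 64 = 3072
kept_pixels = pixel_count(face) + pixel_count(motion)  # 256 + 48 = 304
```

With these assumed sizes, the two streams together carry roughly a tenth of the pixels of the original frame while still preserving fine detail where the face is.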

(Fig. 2: "Driving Handover Levels" Determined by "Driver Concentration-Sensing Technology": http://prw.kyodonews.jp/prwfile/release/M102197/201606071381/_prw_OI2fl_Qroib2Nw.jpg)

(Fig. 3: "Hazard Levels" Determined by "Driver Concentration-Sensing Technology": http://prw.kyodonews.jp/prwfile/release/M102197/201606071381/_prw_OI3fl_tgB7Zzub.jpg)

Demonstrations

OMRON will conduct technological demonstrations of the "driver concentration-sensing technology" at the 22nd Symposium on Sensing via Image Information (SSII2016) (Wednesday, June 8 - Friday, June 10, 2016, Pacifico Yokohama) and the CVPR Industry Expo 2016 (Monday, June 27 - Thursday, June 30, 2016, Las Vegas, U.S.A.).

(*) Time-series deep learning

Time-series deep learning is a neural network technology whose network structure is modified so that it can recognize not only static images but also time-series data such as video. Conventional deep learning technology, which is increasingly used in the field of AI, can recognize single-frame images with extremely high accuracy (for example, determining whether an object in a static image is a cat or a dog), but may not be very accurate in recognizing time-series events, with the result that its time-series applications have been limited to voice recognition and other areas. Human movements are one area in which highly accurate recognition was not possible, because such motions involve very high-dimensional data. As a solution to this technological challenge, OMRON improved Recurrent Neural Network (RNN) technology, which retains past data in its internal memory, to process highly accurate facial data and video data in combination, thus realizing high-accuracy recognition of the diverse driver conditions that unfold over time.
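The idea of a network that "retains past data in its internal memory" can be sketched with a plain Elman-style recurrent cell. This is a textbook form, not OMRON's improved RNN, whose details are not given in this release. The weights, the two-value per-frame features, and the function names below are all hypothetical toy choices.

```python
import math


def rnn_step(x, h, w_x, w_h, b):
    """One recurrent update: h_new = tanh(W_x x + W_h h + b).
    The previous hidden state h is the cell's memory of earlier frames."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(w_x[j], x))
            + sum(w * hi for w, hi in zip(w_h[j], h))
            + b[j]
        )
        for j in range(len(h))
    ]


def run_sequence(frames, w_x, w_h, b):
    """Feed per-frame feature vectors in order and return the final state."""
    h = [0.0] * len(b)
    for x in frames:
        h = rnn_step(x, h, w_x, w_h, b)
    return h


# Toy, untrained weights (assumed values for illustration only).
W_X = [[0.5, -0.3], [0.2, 0.8]]
W_H = [[0.6, 0.1], [-0.4, 0.7]]
B = [0.0, 0.1]

# Hypothetical per-frame features: [eyes_open_score, eyes_closed_score].
open_then_closed = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
closed_throughout = [[0.0, 1.0], [0.0, 1.0], [0.0, 1.0]]

state_a = run_sequence(open_then_closed, W_X, W_H, B)
state_b = run_sequence(closed_throughout, W_X, W_H, B)
```

Both sequences end on the same frame, yet `state_a` and `state_b` differ because the hidden state carries information from earlier frames. A single-frame classifier would see the two final frames as identical.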