Dec 19 2016

If you want to capture a super-slo-mo film of the nanosecond dynamics of a bullet impact, or see a football replay in fanatical detail and rich color, researchers are working on an image sensor for you. Last week at the IEEE International Electron Devices Meeting in San Francisco, two groups reported CMOS image sensors that rely on new ways of integrating pixels and memory cells to improve speed and image quality.

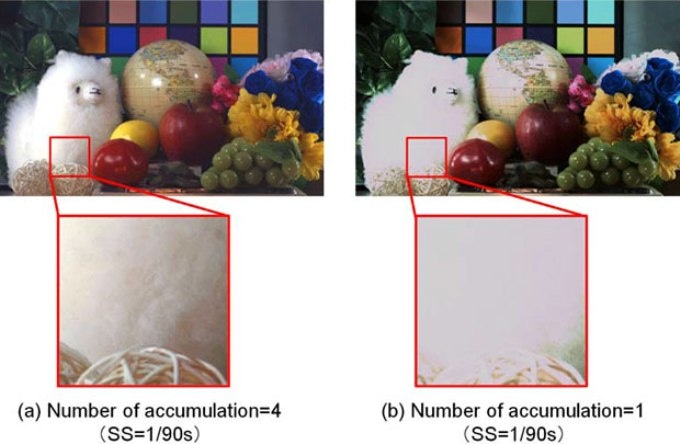

Canon stored charge (a step called accumulation) four times per frame [left] instead of once [right], which yielded a higher dynamic range. Photo: Canon

Both groups are working on improving global-shutter image sensors. CMOS image sensors usually use what’s called a rolling shutter. Rolling-shutter cameras scan across a scene, so different parts of each frame are captured at slightly different times. This makes them speedier, but it can cause distortion, especially when filming a fast-moving target like a car or a bullet. Global shutters are better for filming speeding objects because they can snap the entire scene at once. CMOS sensors aren’t naturally suited to this, because the pixels are usually read out row by row. CCD image sensors, on the other hand, have a global shutter by definition, because all the pixels are read out at once, says Rihito Kuroda, an engineer at Tohoku University in Sendai, Japan. But they’re not ideal for high-speed imaging, either. Because they operate at high voltage, CCDs heat up and use a lot of power at high shutter speeds.
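
The distortion described above can be illustrated with a toy model. The sketch below is not a model of any real sensor: the function name `captured_bar_columns` and its parameters are hypothetical, and it assumes a vertical bar moving sideways at constant speed while the rows of a frame are sampled one after another.

```python
def captured_bar_columns(rows, bar_speed, row_delay, start_col=0):
    """Column at which a moving vertical bar is recorded in each row.

    bar_speed: columns the bar moves per unit time (arbitrary units, assumed).
    row_delay: time between sampling successive rows; 0 models a global shutter.
    """
    return [start_col + int(bar_speed * r * row_delay) for r in range(rows)]

# Global shutter: every row is sampled at the same instant, so the bar stays vertical.
global_frame = captured_bar_columns(rows=4, bar_speed=2, row_delay=0)

# Rolling shutter: each row is sampled one time unit later, so the bar appears slanted.
rolling_frame = captured_bar_columns(rows=4, bar_speed=2, row_delay=1)

print(global_frame)   # [0, 0, 0, 0]
print(rolling_frame)  # [0, 2, 4, 6]
```

In this simplified picture, the slant grows with both the object's speed and the delay between rows, which is why fast targets suffer most.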

To get beyond the row-by-row, rolling-shutter operation of CMOS, chip designers assign each pixel its own memory cell or cells. That provides a global shutter, but with sacrifices. In the case of ultrahigh-speed imaging, the sensors are constrained by their memory capacity, says Kuroda. By focusing on the design of a custom memory bank, Kuroda’s group has developed a CMOS image sensor that can capture one million frames per second for a recording time of 480 microseconds at full resolution, which is relatively long compared with previous ultrahigh-speed image sensors.

Because storage is limited, it’s not possible to take a long, high-speed, high-resolution video: something must be sacrificed. Either the video has to be short, capturing only part of a high-speed phenomenon in great detail, or it must have lower spatial or temporal resolution. So Kuroda’s group focused on boosting storage in the hope of easing all three constraints.

Kuroda’s group made a partial test chip with 96 x 128 pixels. The image sensor is designed to be tiled to reach a million or more pixels. Each pixel in the prototype has 480 memory cells dedicated to it, so the camera can record 480 consecutive frames at full resolution. Other sensors have captured video at higher frame rates, but they’ve had to do it either for a shorter period of time or with poorer spatial resolution.
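
The storage arithmetic behind these figures can be sketched in a few lines. This is a back-of-the-envelope illustration, assuming one memory cell holds one frame's sample for its pixel, as the article describes; the function names are hypothetical.

```python
def recording_time_us(cells_per_pixel, frames_per_second):
    """Recording duration in microseconds, assuming one memory cell per frame per pixel."""
    return cells_per_pixel * 1e6 / frames_per_second

def total_memory_cells(width, height, cells_per_pixel):
    """Number of analog memory cells needed for the whole pixel array."""
    return width * height * cells_per_pixel

# Figures reported for the Tohoku prototype: 96 x 128 pixels, 480 cells per pixel, 1 Mfps.
print(recording_time_us(480, 1_000_000))  # 480.0
print(total_memory_cells(96, 128, 480))   # 5898240
```

Under the same assumption, halving the frame rate would double the recording time, which is the trade-off between duration and temporal resolution that limited storage forces.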

The Tohoku group designed a dense analog memory bank based on vertical capacitors built inside deep trenches in the silicon chip. Because the capacitors hold a variable amount of charge, rather than a simple 0 or 1 as in DRAM, lowering the amount of current that leaks out is critical, says Kuroda. The deeper the trenches, they found, the greater the volume of each capacitor and the lower the leakage current. Increasing volume with trenches rather than by spreading out over the chip saved space and allowed for greater density of memory cells. This meant more memory cells per pixel, which allowed for longer recordings. It also freed up space to put more pixels on the chip, improving the camera’s resolution.

Some of Kuroda’s earlier CMOS image sensor chips, which used planar rather than trenched capacitors, are already on the market in ultrahigh-speed cameras (HPV X and X2 models) made by Shimadzu. He says the new million-frame-per-second sensor will further improve products like them. To push things even further, Kuroda says the next step is to stack the pixel layer on top of the memory layer. This will bring each pixel closer to its memory cells, shortening the time it takes to record each frame and potentially speeding up the sensor even more.

This sort of camera is useful for engineers who need to follow the fine details of how materials fail (for example, how a carbon fiber splits) in order to make those materials more resilient. Physicists can use such cameras too, for example to study the dynamics of plasma formation.

Separately, researchers from Canon’s device technology development headquarters in Kanagawa, Japan, reported memory-related improvements for high-definition image sensors that could be used to cover sporting events or in surveillance drones. While the Tohoku group is working on ultrahigh speed, the Canon group aims to improve the image quality of high-definition global-shutter cameras operating at much lower frame rates of about 30 to 120 frames per second.

Like the Tohoku University chip, the Canon sensor closely integrates analog memory with its pixels. In the Canon chip, each pixel in the 4046 by 2496 array has its own built-in charge-based memory cell. The researchers used an engineering trick to improve the image quality by effectively increasing the exposure time within each frame. Typically, the image sensor dumps its bucket of electrons into the memory cell once per frame. This transfer is called an accumulation. The Canon pixels can do as many as four accumulations per frame, emptying their charges into the associated memory cell four times. This improves the saturation and dynamic range of the images relative to previous global-shutter CMOS devices operating around the same frame rates. At 30 frames per second, the sensor maintains a dynamic range of 92 dB.
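
To see roughly why extra accumulations help, dynamic range is commonly expressed in decibels as 20·log10 of the largest recordable signal divided by the noise floor. The sketch below is an idealized illustration, not Canon's method: it assumes the memory cell can hold the charge from every accumulation and that the noise floor does not change. The function names and the signal values are hypothetical. It does not attempt to reproduce the 92 dB figure, which depends on noise and saturation values the article does not give.

```python
import math

def dynamic_range_db(max_signal, noise_floor):
    """Dynamic range in dB for a given saturation signal and noise floor (same units)."""
    return 20 * math.log10(max_signal / noise_floor)

def accumulation_gain_db(accumulations):
    """Idealized dynamic-range gain if saturation scales with the number of
    accumulations and the noise floor stays fixed (both are assumptions)."""
    return 20 * math.log10(accumulations)

# Four accumulations per frame instead of one, under the idealized assumptions above.
print(round(accumulation_gain_db(4), 2))  # 12.04

# Consistency check with arbitrary, made-up signal and noise values.
single = dynamic_range_db(max_signal=1000.0, noise_floor=1.0)
quadruple = dynamic_range_db(max_signal=4 * 1000.0, noise_floor=1.0)
assert abs((quadruple - single) - accumulation_gain_db(4)) < 1e-9
```

In a real sensor the gain would likely be smaller than this ideal figure, since each transfer can add noise of its own.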

This story was corrected on 19 December 2016. It is not certain Shimadzu will incorporate the current research into a product.