May 30 2019

MIT researchers have successfully compiled a huge dataset by wearing a sensor-packed glove while handling a wide range of objects.

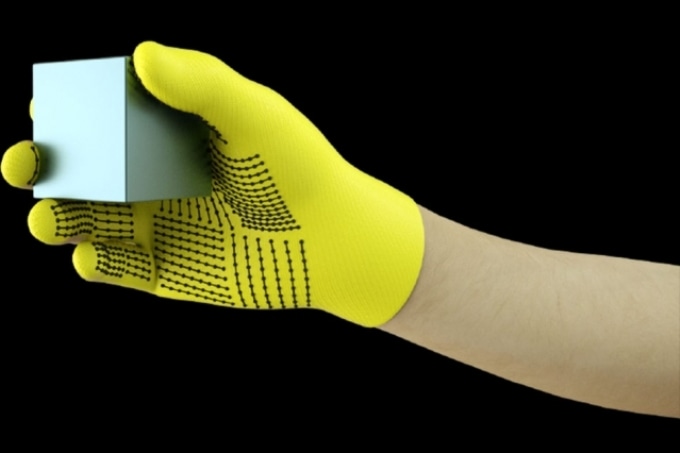

MIT researchers have developed a low-cost, sensor-packed glove that captures pressure signals as humans interact with objects. The glove can be used to create high-resolution tactile datasets that robots can leverage to better identify, weigh, and manipulate objects. (Image credit: MIT)

MIT researchers have developed a low-cost, sensor-packed glove that captures pressure signals as humans interact with objects. The glove can be used to create high-resolution tactile datasets that robots can leverage to better identify, weigh, and manipulate objects. (Image credit: MIT)

This dataset allows an Artificial Intelligence (AI) system to detect objects through touch alone. The data can possibly be exploited to help robots in detecting and manipulating objects, and it could also help in prosthetics design.

A cost-effective knitted glove, known as “scalable tactile glove” (STAG), has been developed by the researchers. This glove is integrated with approximately 550 small sensors across almost the whole hand. Pressure signals are captured by each sensor as humans interact with various objects in many different ways. These signals are processed by a neural network to understand a dataset of pressure-signal patterns associated with certain objects. The system subsequently utilizes that dataset to categorize the objects and estimate their weights by feel alone, without any need for visual input.

In a paper recently reported in Nature, the scientists have demonstrated a dataset which they had compiled using the STAG glove for 26 standard objects, including a mug, pen, spoon, tennis ball, scissors, and a soda can. With the help of the dataset, the system predicted the identities of the objects with a precision of around 76%. In addition, the system can predict the exact weights of a majority of objects within roughly 60 g.

Today, similar sensor-based gloves are utilized that are exorbitantly priced and usually contain just about 50 tiny sensors that capture only a minimum amount of data. Although very high-resolution data is generated by STAG, it is made from materials that are commercially available in the market, totaling roughly $10.

It is possible to combine the tactile sensing system with image-based datasets and conventional computer vision to give robots a more human-like interpretation of interacting with objects.

Humans can identify and handle objects well because we have tactile feedback. As we touch objects, we feel around and realize what they are. Robots don’t have that rich feedback. We’ve always wanted robots to do what humans can do, like doing the dishes or other chores. If you want robots to do these things, they must be able to manipulate objects really well.

Subramanian Sundaram, PhD ’18, Former Graduate Student, Computer Science and Artificial Intelligence Laboratory, MIT

The dataset was also used by the team to determine the cooperation between areas of the hand during interactions with objects. For instance, when individuals utilize the middle joint of their index finger, they will seldom use their thumb; however, the tips of the middle and index fingers invariably relate to the usage of thumb.

“We quantifiably show, for the first time, that, if I’m using one part of my hand, how likely I am to use another part of my hand,” he stated.

Information can possibly be used by prosthetics manufacturers to, for example, select optimal areas to place pressure sensors and help modify prosthetics to the objects and tasks that are regularly interacted by the people.

Sundaram’s paper also includes CSAIL postdocs Jun-Yan Zhu and Petr Kellnhofer; Antonio Torralba, a professor in EECS and director of the MIT-IBM Watson AI Lab; CSAIL graduate student Yunzhu Li; and also Wojciech Matusik, an associate professor in electrical engineering and computer science and head of the Computational Fabrication group.

An electrically conductive polymer that varies resistance to applied pressure is used to laminate STAG. Conductive threads were also sewn through the holes in the conductive polymer film—right from the tips of the fingers to the base of the palm.

These conductive threads overlap in a way that changes them into pressure sensors. If a person wearing the glove lifts, holds, feels, and drops a certain object, the pressure will be recorded by the sensors at each point.

The conductive threads join from the glove to an exterior circuit that converts the pressure data into “tactile maps,” which are basically short videos of dots increasing and reducing in size across a hand graphic. The dots denote the position of the pressure points, and their size denotes the force—bigger dot translates to greater pressure.

Using those tactile maps, the team compiled a dataset of approximately 135,000 video frames from interactions with as many as 26 objects. A neural network can use these video frames to estimate the objects’ weight and identity, and offer a better undemanding of the human grasp.

In order to detect objects, the team created a convolutional neural network, or CNN, which is often used for categorizing images, to relate certain pressure patterns with particular objects. However, the trick was selecting frames from varied kinds of grasps to obtain a complete picture of the object.

The aim was to imitate the way humans can grasp an object in several varied ways so as to identify it, without having to use their eyesight. In a similar way, the CNN designed by the researchers selects around eight semi-random frames from the video representing the most different grasps, for example, holding a mug from the handle, top, and bottom.

Conversely, the CNN cannot simply select haphazard frames from the unlimited number present in every video, or it probably will not select clear grips. Rather, it combines analogous frames together, leading to evident clusters that correspond to special grasps. Subsequently, it pulls a single frame from each of those distinct clusters, making sure that it has a representative sample. The CNN subsequently utilizes the contact patterns which it learned during training to estimate the classification of an object from the selected frames.

We want to maximize the variation between the frames to give the best possible input to our network. All frames inside a single cluster should have a similar signature that represent the similar ways of grasping the object. Sampling from multiple clusters simulates a human interactively trying to find different grasps while exploring an object.

Petr Kellnhofer, Postdoc, Computer Science and Artificial Intelligence Laboratory, MIT

To predict the weight, the team constructed an individual dataset of about 11,600 frames from tactile maps of objects that are being picked up by thumb and finger, held, and then dropped. Remarkably, the CNN was not trained on any kind of frames it was tested on; this implies that it could not learn to simply relate weight with an object. During the test, the CNN was fed with one frame. Principally, the CNN detects the pressure around the hand induced by the weight of the object, and overlooks the pressure induced by other factors, for example, hand positioning to avoid the object from slipping. It then computes the weight on the basis of the suitable pressures.

The AI system could be integrated with the sensors, which are already on the robot joints that determine both force and torque to help them better estimate the weight of the object.

Joints are important for predicting weight, but there are also important components of weight from fingertips and the palm that we capture.

Subramanian Sundaram, PhD ’18, Former Graduate Student, Computer Science and Artificial Intelligence Laboratory, MIT