Oct 13 2010

Sensor gloves currently used to translate American Sign Language have a key limitation: they can recognize only the letters spelled out with rapid finger signals, whereas sign language relies on far more complex gestures to convey words and meanings.

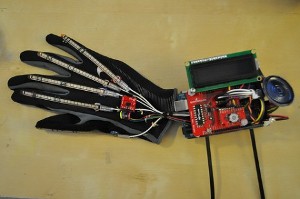

Sensor Gloves with Sensing units and cables


Researchers at Carnegie Mellon University, under the guidance of Kendall Lowrey, set out to overcome this limitation and to link the glove to a voice box and screen so that translated gestures are spoken aloud instantly.

The glove is fitted with flex sensors on the fingers and an integrated accelerometer that interprets arm movements. The system also includes a miniature LCD display, a very small speaker, Arduino Mega-based wiring and input loops, and a number of mathematical and perception filters.
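The article does not say how the glove's filters or recognition step actually work. As a rough illustration only, the sketch below assumes one common approach: smooth each noisy flex-sensor channel with a moving average, then match the smoothed finger bends to the nearest stored hand shape. The class `MovingAverage`, the function `classify`, the `TEMPLATES` table and its bend values are all hypothetical, and the accelerometer (arm motion) is ignored; this is not the Carnegie Mellon team's implementation.

```python
import math
from collections import deque


class MovingAverage:
    """Smooths one noisy sensor channel by averaging its last `window` readings."""

    def __init__(self, window: int = 5):
        self.buf = deque(maxlen=window)  # oldest reading drops out automatically

    def update(self, value: float) -> float:
        self.buf.append(value)
        return sum(self.buf) / len(self.buf)


# Hypothetical reference hand shapes: normalized bend per finger
# (0 = straight, 1 = fully bent) for thumb, index, middle, ring, little.
# Illustrative values only, not measured data.
TEMPLATES = {
    "A": (0.2, 1.0, 1.0, 1.0, 1.0),
    "B": (0.8, 0.0, 0.0, 0.0, 0.0),
    "L": (0.0, 0.0, 1.0, 1.0, 1.0),
}


def classify(bends):
    """Return the template letter whose bend pattern is closest (Euclidean distance)."""
    return min(TEMPLATES, key=lambda letter: math.dist(bends, TEMPLATES[letter]))
```

In use, one `MovingAverage` would be kept per finger, each new raw sample passed through `update`, and the five smoothed values handed to `classify`. Nearest-template matching is chosen here only because it is short and easy to follow; recognizing whole words would also need the arm-motion data over time.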

At present, the experimental glove can recognize only the letters of the alphabet and nearly ten words, but it could be developed considerably further to add more functionality.