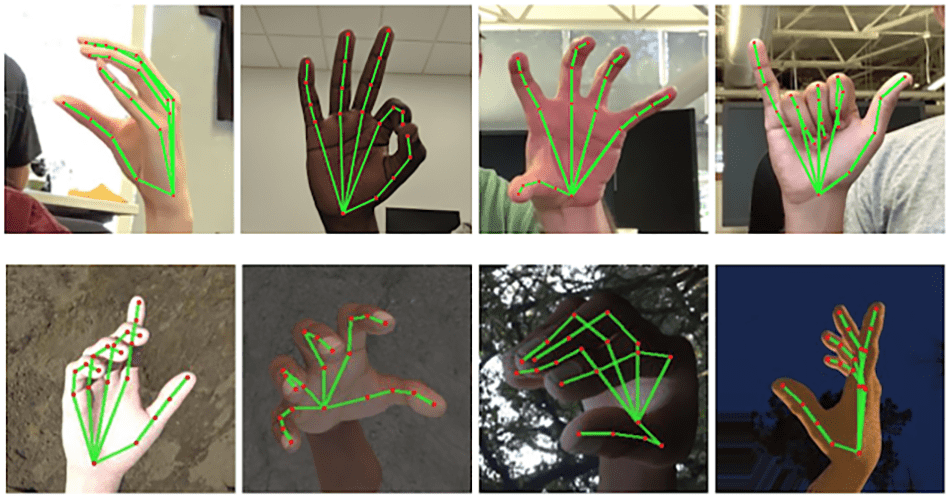

Top: Aligned hand crops passed to the tracking network with ground truth annotation. Bottom: Rendered synthetic hand images with ground truth annotation. Image Source: Google AI

Across the globe, millions of people use sign language to communicate every day. Until now, efforts to develop software or applications that capture complex hand gestures and translate them into speech have had limited success. However, Google's latest announcement could be the cue that many have been waiting for: the company says it is now within the realm of possibility for a smartphone to "read aloud" sign language.

Although Google has yet to develop an app of its own, the tech giant has published algorithms that will enable developers to build their own apps for smartphones and other devices. Previously, software with this capability was available only for PCs.

Robust real-time hand perception is a decidedly challenging computer vision task, as hands often occlude themselves or each other (e.g. finger/palm occlusions and hand-shakes) and lack high contrast patterns.

Valentin Bazarevsky and Fan Zhang, researchers, writing on Google's AI blog

Furthermore, hand gestures vary from person to person because of the quick and subtle movements each individual may add. This increases the amount of data in the images, so the researchers' task is to reduce the amount of data the algorithms need to process. Bazarevsky and Zhang went on to add, "Whereas current state-of-the-art approaches rely primarily on powerful desktop environments for inference, our method achieves real-time performance on a mobile phone, and even scales to multiple hands."

With this hand perception functionality, the researchers at Google hope to see a wide range of creative applications and new avenues for research opening up in the near future.

Campaigners have welcomed the breakthrough but say that an app producing audio from hand signals alone would miss important facial expressions and the speed of signing, both of which can alter the meaning of what is being said.

Action on Hearing Loss's technology manager, Jesal Vishnuram, says that this is a good first step for hearing people, but that other capabilities should be considered: "From a deaf person's perspective, it'd be more beneficial for software to be developed which could provide automated translations of text or audio into British Sign Language (BSL) to help everyday conversations and reduce isolation in the hearing world."

Earlier software of this kind was often confused when parts of the hand were obscured by the movement and bending of the fingers and wrist, which made video tracking difficult. Google's approach is to use a 21-point graph across the fingers, palm and back of the hand, making it easier for the software to interpret a sign or gesture even when fingers overlap, touch or bend.
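To make the idea of a 21-point graph more concrete, here is a minimal Python sketch. It assumes a hypothetical layout with the wrist at index 0 followed by four points per finger (base to fingertip); the index scheme and the names `FINGERS`, `hand_edges` and `joint_angle` are illustrative and are not taken from Google's published code. Measuring the angle at each joint is one way such a graph can describe how far a finger is bent, even when it partly covers another.

```python
import math

# Hypothetical 21-point layout (an assumption, not Google's published scheme):
# index 0 is the wrist, then four points per finger from its base to its tip.
FINGERS = {
    "thumb": [1, 2, 3, 4],
    "index": [5, 6, 7, 8],
    "middle": [9, 10, 11, 12],
    "ring": [13, 14, 15, 16],
    "pinky": [17, 18, 19, 20],
}


def hand_edges():
    """Return the skeleton edges that connect the 21 points into a tree."""
    edges = []
    for joints in FINGERS.values():
        # Connect the wrist to the base of each finger...
        edges.append((0, joints[0]))
        # ...then chain consecutive joints along the finger out to the tip.
        edges.extend(zip(joints, joints[1:]))
    return edges


def joint_angle(a, b, c):
    """Angle in degrees at point b between segments b->a and b->c.

    Roughly 180 degrees means the finger is straight at that joint;
    smaller values mean it is bent.
    """
    v1 = [p - q for p, q in zip(a, b)]
    v2 = [p - q for p, q in zip(c, b)]
    dot = sum(x * y for x, y in zip(v1, v2))
    norm = math.hypot(*v1) * math.hypot(*v2)
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))
```

Because every point is tied to its neighbours, a tracker working on such a graph can reason about a joint from the positions around it rather than relying only on how that joint looks in the image.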

Additionally, rather than searching for and detecting each whole hand, the researchers had the system first locate the palm and, from there, work out the positions of the fingers, which are analyzed separately.
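The palm-first approach can be sketched as a two-stage pipeline: find the palm, enlarge that box into a crop that also covers the fingers, predict points inside the crop, then map them back to the full image. This is an illustration under stated assumptions: `detect_palm` and `predict_landmarks` are hypothetical stand-ins for the two trained models, and the crop `scale` value is a made-up parameter rather than a figure from Google.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned box in normalized image coordinates (0..1)."""
    cx: float  # centre x
    cy: float  # centre y
    w: float   # width
    h: float   # height


def palm_to_hand_crop(palm: Box, scale: float = 2.5) -> Box:
    """Enlarge a detected palm box into a square crop that also covers the fingers.

    `scale` is a hypothetical parameter: the palm is small relative to the
    whole hand, so the crop must be grown before finger points are predicted.
    """
    size = max(palm.w, palm.h) * scale
    return Box(palm.cx, palm.cy, size, size)


def crop_to_image(point, crop: Box):
    """Map a point predicted in crop coordinates (0..1) back to image coordinates."""
    x, y = point
    left = crop.cx - crop.w / 2
    top = crop.cy - crop.h / 2
    return (left + x * crop.w, top + y * crop.h)


def track_hand(frame, detect_palm, predict_landmarks):
    """Two-stage sketch: locate the palm, then predict hand points inside a crop.

    `detect_palm(frame)` returns a Box or None; `predict_landmarks(frame, crop)`
    returns points in crop coordinates. Both are hypothetical callables.
    """
    palm = detect_palm(frame)
    if palm is None:
        return None  # no hand found in this frame
    crop = palm_to_hand_crop(palm)
    local_points = predict_landmarks(frame, crop)
    return [crop_to_image(p, crop) for p in local_points]
```

One motivation for this split, consistent with the description above, is that a palm is a fairly compact, rigid shape and so is easier to detect than a whole hand with moving fingers; the second stage then only has to look at a small, consistently framed region.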

The artificial intelligence learns from a series of real-world images showing various poses and gestures. Some 30,000 of these images were manually annotated with the 21 points so the AI could learn from them. This training can then be applied in a working scenario: once a human hand has been detected, its pose is compared with familiar gestures and poses, ranging from sign-language symbols for letters and numbers to gestures such as "yeah," "rock" and even "Spiderman."
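The comparison step can be illustrated with a deliberately simple, rule-based sketch: decide whether each finger is extended, then look the resulting pattern up in a table of known gestures. The landmark layout, the `is_extended` heuristic and the `GESTURES` table are assumptions for illustration only. The finger patterns assigned to "yeah," "rock" and "Spiderman" are the common hand shapes we assume for those names, not definitions taken from Google.

```python
import math

# Hypothetical 21-point layout (assumption): wrist = 0, then four points per
# finger ordered from base to tip.
FINGERS = {
    "thumb": [1, 2, 3, 4],
    "index": [5, 6, 7, 8],
    "middle": [9, 10, 11, 12],
    "ring": [13, 14, 15, 16],
    "pinky": [17, 18, 19, 20],
}

# Hypothetical lookup table: finger states ordered (thumb, index, middle,
# ring, pinky), where True means "extended", mapped to gesture names.
GESTURES = {
    (False, True, True, False, False): "yeah",      # assumed V shape
    (False, True, False, False, True): "rock",      # index and pinky raised
    (True, True, False, False, True): "Spiderman",  # thumb, index and pinky
}


def is_extended(landmarks, joints):
    """Crude heuristic: a finger counts as extended if its tip is farther
    from the wrist than its second joint."""
    wrist = landmarks[0]
    second_joint = landmarks[joints[1]]
    tip = landmarks[joints[3]]
    return math.dist(wrist, tip) > math.dist(wrist, second_joint)


def classify(landmarks):
    """Return the name of the matching gesture, or "unknown"."""
    state = tuple(is_extended(landmarks, j) for j in FINGERS.values())
    return GESTURES.get(state, "unknown")
```

The distance test is rough, particularly for the thumb, which folds across the palm rather than back toward the wrist; a real system would more likely use joint angles or a trained classifier, but the lookup structure shows how a detected pose can be matched against a set of familiar gestures.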

Google has committed to continue developing this technology by stabilizing the tracking system and increasing the number and types of gestures it can distinguish.

The Google researchers, Bazarevsky and Zhang, said, “We believe that publishing this technology can give an impulse to new creative ideas and applications by the members of the research and developer community at large. We are excited to see what you can build with it!”

Researchers and creative developers can currently access the source code here to build on, as it is not yet licensed for, or used in, any Google products.

Disclaimer: The views expressed here are those of the author expressed in their private capacity and do not necessarily represent the views of AZoM.com Limited T/A AZoNetwork, the owner and operator of this website. This disclaimer forms part of the Terms and conditions of use of this website.