Oct 30 2019

For all our technological progress, evolution remains hard to beat at research and development. Jumping spiders, for instance, are tiny arachnids with remarkable depth perception despite their extremely small brains, which lets them leap accurately onto unsuspecting prey from several body lengths away.

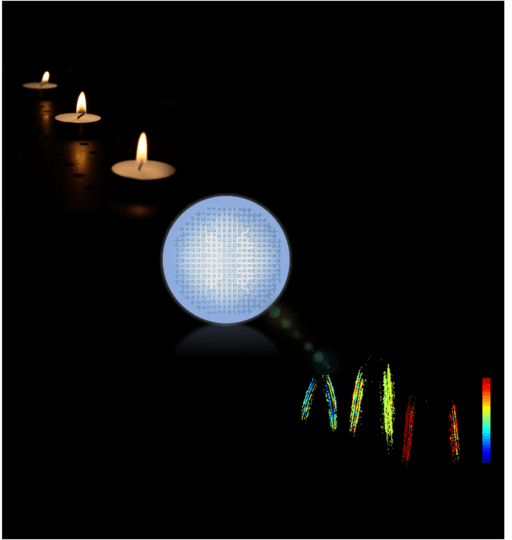

Spider-inspired metalens: the flat metalens (shown in the middle) captures images of a 3D scene, for example, candle flames placed at different locations (left), and produces a depth map (right) using an efficient computer vision algorithm inspired by the eyes of jumping spiders. The color on the depth map represents object distance: closer objects are colored red and farther objects blue. (Image credit: Qi Guo and Zhujun Shi/Harvard University)

Drawing inspiration from these spiders, scientists at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) have created a compact and efficient depth sensor that could be employed on board microrobots, in lightweight virtual and augmented reality headsets, or in small wearable devices. The device integrates a multifunctional, flat metalens with an ultra-efficient algorithm to compute depth in one shot.

Evolution has produced a wide variety of optical configurations and vision systems that are tailored to different purposes. Optical design and nanotechnology are finally allowing us to explore artificial depth sensors and other vision systems that are similarly diverse and effective.

Zhujun Shi, Study Co-First Author and PhD candidate, Department of Physics, Harvard University

The study has been published in Proceedings of the National Academy of Sciences (PNAS).

Most existing depth sensors, such as those in cars, phones, and video game consoles, use several cameras and integrated light sources to measure distance. Face ID on a smartphone, for instance, uses thousands of laser dots to map the contours of the face. This works for large devices with room for fast computers and batteries, but not for small devices with limited power and computation, such as microrobots or smartwatches.

Evolution, as it turns out, offers plenty of options.

Humans measure depth using stereo vision: when a person looks at an object, each eye captures a slightly different image. To see this, hold a finger straight in front of your face and alternately open and close each eye, noticing how the finger appears to move. The brain takes those two images, examines them pixel by pixel, and calculates the distance to the finger from how the pixels shift.

That matching calculation, where you take two images and perform a search for the parts that correspond, is computationally burdensome. Humans have a nice, big brain for those computations but spiders don’t.

Todd Zickler, the William and Ami Kuan Danoff Professor of Electrical Engineering and Computer Science, SEAS, Harvard University

Zickler was also a co-senior author of the study.
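The cost of that matching step can be seen in a minimal sketch of stereo block matching on a single image row. This is a textbook illustration, not code from the study; the function names, the toy pixel values, and the camera parameters (`focal_px`, `baseline_m`) are all hypothetical. For every pixel, the search compares a small patch against every candidate shift, which is where the computational burden comes from.

```python
def match_disparity(left, right, x, half_window, max_disparity):
    """Search for the shift (in pixels) that best aligns the patch around
    left[x] with the right-eye row, using the sum of absolute differences."""
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        if x - d - half_window < 0:
            break  # the shifted patch would fall off the row
        cost = sum(
            abs(left[x + k] - right[x - d + k])
            for k in range(-half_window, half_window + 1)
        )
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Standard pinhole stereo relation: depth = focal length * baseline / disparity."""
    if disparity_px == 0:
        return float("inf")
    return focal_px * baseline_m / disparity_px


# Toy rows (hypothetical values): the same feature sits 3 pixels
# further left in the right-eye row than in the left-eye row.
left = [0, 0, 0, 0, 0, 0, 0, 2, 9, 4, 0, 0, 0, 0, 0, 0]
right = [0, 0, 0, 0, 2, 9, 4, 0, 0, 0, 0, 0, 0, 0, 0, 0]

disparity = match_disparity(left, right, x=8, half_window=1, max_disparity=6)
depth_m = depth_from_disparity(disparity, focal_px=600, baseline_m=0.06)
```

Even in this toy case, one pixel needs a patch comparison per candidate shift; a full image repeats that search at every pixel.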

Jumping spiders have evolved a more efficient way to compute depth. Each principal eye has a few semi-transparent retinae arranged in layers, which capture several images with different amounts of blur. For instance, if a jumping spider looks at a fruit fly with one of its principal eyes, the fly will appear sharper in one retina’s image and blurrier in another. This change in blur encodes information about the distance to the fly.

In computer vision, this type of distance calculation is called depth from defocus. Until now, however, replicating nature has required large cameras with motorized internal parts that capture differently focused images over time. This limits the sensor’s speed and practical applications.
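How blur can encode distance is easiest to see with an idealized thin-lens model, sketched below. This is an illustrative assumption, not a model of the spider's eye or of the Harvard device: the lens parameters, the two sensor-plane positions (standing in for two retinal layers), and all function names are hypothetical, and the inversion only holds when the object comes to focus between the two planes.

```python
def image_distance(focal_length, object_distance):
    """Thin-lens equation: 1/f = 1/Z + 1/v, solved for the focus distance v."""
    return 1.0 / (1.0 / focal_length - 1.0 / object_distance)


def blur_diameter(aperture, sensor_distance, focus_distance):
    """Diameter of the blur circle on a sensor plane that is not at the focus."""
    return aperture * abs(sensor_distance - focus_distance) / focus_distance


def depth_from_two_blurs(blur_near, blur_far, s_near, s_far, focal_length):
    """Recover object distance from the blur on two planes.
    Assumes the focus lies between the planes (s_near < v < s_far), so
    blur_near is proportional to (v - s_near) and blur_far to (s_far - v)."""
    focus = (blur_near * s_far + blur_far * s_near) / (blur_near + blur_far)
    return 1.0 / (1.0 / focal_length - 1.0 / focus)


# Hypothetical numbers, all in millimetres.
f, aperture = 10.0, 2.0
s_near, s_far = 10.2, 10.6
true_distance = 300.0

v = image_distance(f, true_distance)
c_near = blur_diameter(aperture, s_near, v)
c_far = blur_diameter(aperture, s_far, v)
recovered = depth_from_two_blurs(c_near, c_far, s_near, s_far, f)
```

In this idealized setup the pair of blur sizes determines the distance directly, with no search over candidate matches.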

This is where the metalens comes in.

Federico Capasso, the Robert L. Wallace Professor of Applied Physics and Vinton Hayes Senior Research Fellow in Electrical Engineering at SEAS and co-senior author of the paper, and his lab have already demonstrated metalenses that can simultaneously produce several images containing different information. Building on that work, the team designed a metalens that simultaneously produces two images with different blur.

“Instead of using layered retina to capture multiple simultaneous images, as jumping spiders do, the metalens splits the light and forms two differently-defocused images side-by-side on a photosensor,” said Shi, who is part of Capasso’s lab.

Then, an ultra-efficient algorithm, developed by Zickler’s team, analyzes the two images and builds a depth map that represents object distance.
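The article does not describe the algorithm's internals, so the following is only a sketch of why two differently blurred images can give a depth cue with simple arithmetic and no matching search. It assumes Gaussian blur, for which a small increase in blur changes the image by roughly half the change in blur variance times the image's second derivative. Dividing the image difference by that second derivative therefore estimates how much the blur changed, which in a depth-from-defocus setup depends on distance. The 1D scene, the blur widths, and all function names are hypothetical, and this is not presented as the team's method.

```python
import math


def gaussian_kernel(sigma):
    """Sampled, normalized Gaussian truncated at 4 standard deviations."""
    radius = int(math.ceil(4 * sigma))
    weights = [math.exp(-0.5 * (k / sigma) ** 2) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur(signal, sigma):
    """Convolve a 1D signal with a Gaussian, clamping indices at the borders."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * signal[j]
        out.append(acc)
    return out


def blur_change(image_a, image_b):
    """Least-squares ratio of (image_b - image_a) to the second derivative
    of their average, pooled over the whole row. No search is involved."""
    mean = [(a + b) / 2 for a, b in zip(image_a, image_b)]
    num = den = 0.0
    for i in range(1, len(mean) - 1):
        diff = image_b[i] - image_a[i]
        curvature = mean[i - 1] - 2 * mean[i] + mean[i + 1]
        num += diff * curvature
        den += curvature * curvature
    return num / den


# Hypothetical 1D scene: a smooth bright bump seen through two blur levels.
scene = [math.exp(-0.5 * ((i - 50) / 6.0) ** 2) for i in range(101)]
sharper = blur(scene, 2.0)
blurrier = blur(scene, 2.4)

estimate = blur_change(sharper, blurrier)
expected = (2.4 ** 2 - 2.0 ** 2) / 2  # half the difference in blur variance
```

The estimate comes from a handful of multiplications and additions per pixel, in contrast to the per-pixel search that stereo matching requires.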

“Being able to design metasurfaces and computational algorithms together is very exciting,” said Qi Guo, a PhD candidate in Zickler’s lab and the paper’s co-first author. “This is a new way of creating computational sensors, and it opens the door to many possibilities.”

Metalenses are a game-changing technology because of their ability to implement existing and new optical functions much more efficiently, faster and with much less bulk and complexity than existing lenses. Fusing breakthroughs in optical design and computational imaging has led us to this new depth camera that will open up a broad range of opportunities in science and technology.

Federico Capasso, Study Co-Senior Author and Robert L. Wallace Professor of Applied Physics, SEAS, Harvard University

This research paper was co-authored by Yao-Wei Huang, Emma Alexander, and Cheng-Wei Qiu, of the National University of Singapore. The study was supported by the Air Force Office of Scientific Research and the US National Science Foundation.