Robots are, by definition, machines with a degree of autonomy. Over the past several years, robots have gained significant momentum in a variety of industries, such as logistics and delivery, manufacturing, cleaning and disinfection, and daily services. As people enjoy the enormous benefits of robots and autonomy, it is crucial to understand how these autonomous functions (e.g., autonomous navigation, object detection, force measurement, and collision detection) are achieved.

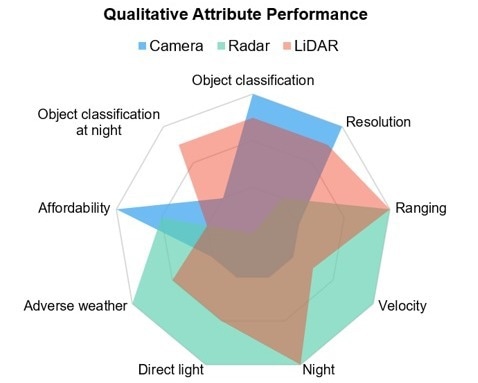

A qualitative analysis of different sensors’ performances. Image Credit: IDTechEx - “Sensors for Robotics 2023-2043: Technologies, Markets, and Forecasts”

The answer is sensors. Sensors enable robots to perceive their surroundings (e.g., detecting objects and collisions, measuring forces) and to measure their internal parameters (e.g., angular velocity, temperature), so that they can react accordingly when necessary. With the market for robots growing rapidly and demand for autonomy accelerating across multiple industries, sensors for robots are expected to enter their prime time very soon. IDTechEx believes that annual revenue for sensors in the robotics industry will exceed US$80 billion by 2043. More details on this estimate can be found in IDTechEx’s latest research, “Sensors for Robotics 2023-2043: Technologies, Markets, and Forecasts”.

As one of the most important components in robots, sensors can be categorized as proprioceptive or exteroceptive. Proprioceptive sensors measure internal data such as joint speed, torque, position, and force. Exteroceptive sensors collect parameters of the surrounding environment, such as light intensity, chemicals, and the distance to an object. In practice, multiple sensors are used together to handle complex tasks, and sensor fusion algorithms merge data from various modalities (e.g., sound, vision, haptics, force) to provide a robust output. The table below outlines typical types of sensors and their applications.
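To make the idea of sensor fusion more concrete, here is a minimal Python sketch of one of the simplest fusion rules, inverse-variance weighting. This rule combines independent, unbiased readings of the same quantity and gives more weight to the less noisy sensor. The sensor names, distances, and noise variances are hypothetical values chosen for illustration, and the readings are assumed to be independent. Real fusion stacks typically use Kalman-type filters that also track how the quantity changes over time.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    """One distance reading from one sensor (hypothetical structure)."""
    sensor: str
    value_m: float    # measured distance in metres
    variance: float   # noise variance in m^2, assumed known (e.g. from a datasheet)


def fuse(measurements: list[Measurement]) -> tuple[float, float]:
    """Inverse-variance weighted average of independent readings of one quantity.

    Returns the fused estimate and its variance; less noisy sensors get more weight.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    estimate = sum(w * m.value_m for w, m in zip(weights, measurements)) / total
    # The fused variance is smaller than any single sensor's variance.
    return estimate, 1.0 / total


# Hypothetical readings: a LiDAR and a noisier ultrasonic sensor see the same obstacle.
readings = [
    Measurement("lidar", 2.00, 0.01),
    Measurement("ultrasonic", 2.20, 0.04),
]
estimate, variance = fuse(readings)
print(f"fused distance: {estimate:.2f} m (variance {variance:.3f} m^2)")
# → fused distance: 2.04 m (variance 0.008 m^2)
```

The fused estimate lands closer to the LiDAR reading because that sensor is assumed to be four times less noisy.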

The Increasing Demand for Autonomous Mobility Accelerates the Adoption of Navigation and Safety Sensors

Autonomous mobility is one of the most important aspects of robot autonomy. With increasing demand for contactless and robotic delivery and logistics, self-driving weeding robots, cleaning robots, and many other applications, IDTechEx concludes that autonomous mobility will see unprecedented growth. Autonomous mobility requires robots to have a suite of functions, including autonomous navigation and mapping, object detection, proximity detection, posture control, and collision detection. Although all these functions are arguably equally important, navigation and safety usually take priority. Typical sensors for navigation and safety include cameras, Radar, and LiDAR, each with its own benefits and drawbacks. Cameras are the most affordable and offer the highest resolution, but they perform poorly in low-visibility environments (e.g., at night). Radar, by contrast, is unaffected by low-light conditions and can accurately measure range and velocity, although it is more expensive than cameras. LiDAR offers the best object classification performance in bad weather and at night, but it is significantly more expensive than both Radar and cameras. The chart below compares the pros and cons of these sensors. The final choice of sensors therefore depends on the robot’s working environment. For instance, indoor mobile robots such as autonomous mobile robots (AMRs) and automated guided vehicles (AGVs) may not need to deal with bad weather or low visibility, as they work in well-controlled environments. Outdoor robots such as autonomous weeding robots, on the other hand, need high robustness to limited visibility and poor weather.
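The environment-dependent trade-offs described above can be illustrated with a deliberately simplified rule set in Python. The `Environment` fields and the mapping are assumptions that simply restate this section's qualitative comparison: cameras are always included, low visibility adds Radar, and bad weather adds LiDAR. This is not a design guideline. Real sensor suites also weigh cost, range, safety certification, and redundancy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    """Hypothetical description of a mobile robot's working conditions."""
    low_visibility: bool = False  # e.g. operating at night
    bad_weather: bool = False     # e.g. rain, fog or dust outdoors


def suggest_navigation_sensors(env: Environment) -> list[str]:
    """Toy rule set mirroring the qualitative camera/Radar/LiDAR trade-offs."""
    # Cameras: most affordable and highest resolution, so every suite starts here.
    suite = ["camera"]
    if env.low_visibility:
        # Radar is unaffected by low light and measures range and velocity.
        suite.append("radar")
    if env.bad_weather:
        # LiDAR is credited with the best object classification in bad weather,
        # at a significantly higher cost than Radar or cameras.
        suite.append("lidar")
    return suite


# Indoor AMR/AGV in a well-controlled environment.
print(suggest_navigation_sensors(Environment()))
# → ['camera']

# Outdoor weeding robot working at night and in poor weather.
print(suggest_navigation_sensors(Environment(low_visibility=True, bad_weather=True)))
# → ['camera', 'radar', 'lidar']
```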

Safe Human-Robot Interaction (HRI) Drives the Adoption of Safety Sensors

Regardless of robot type and working environment, safety is always the overarching priority in HRI. In this context, safety primarily concerns collisions, so collision detection, proximity detection, object detection/avoidance, and force/torque control are considered critical functions. Commonly used sensors include vision sensors, ultrasonic sensors, infrared sensors, force and torque sensors, and tactile sensors, among others. As with navigation sensors, the choice of safety sensors depends on the specific working scenario. For example, force and torque sensors are commonly used in collaborative robots (cobots) for both force measurement and collision detection, while infrared sensors (photoelectric light curtains) and LiDAR are used for proximity detection in HRI. IDTechEx’s recent research on cobots shows that the cobot market is expected to exceed US$80 billion within two decades. This rapid growth will drive a fast-growing market for sensors in the cobot and wider robotics industries. More details on the sensor market size by robot type and sensor type can be found in IDTechEx’s latest research, “Sensors for Robotics 2023-2043: Technologies, Markets, and Forecasts”.
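As a rough illustration of how force/torque sensing supports collision detection in cobots, the Python sketch below compares each joint's measured torque with the torque expected from a dynamic model. It flags a possible collision whenever the difference exceeds a per-joint threshold. The `JointReading` structure, the torque values, and the thresholds are all hypothetical. Production controllers typically filter these signals and use observer-based estimates (e.g., momentum observers) rather than a raw difference.

```python
from dataclasses import dataclass


@dataclass
class JointReading:
    """One joint's torque sample in N·m (hypothetical structure)."""
    measured: float   # value reported by the joint torque sensor
    expected: float   # value predicted by the robot's dynamic model for the commanded motion


def detect_collision(readings: list[JointReading], thresholds: list[float]) -> list[int]:
    """Return indices of joints whose torque residual exceeds that joint's threshold.

    A large gap between measured and expected torque suggests an external force,
    such as contact with a person or an obstacle.
    """
    if len(readings) != len(thresholds):
        raise ValueError("one threshold per joint is required")
    return [
        i
        for i, (r, limit) in enumerate(zip(readings, thresholds))
        if abs(r.measured - r.expected) > limit
    ]


# Hypothetical 3-joint arm: joint 1 sees an unexpected 6 N·m disturbance.
readings = [JointReading(10.2, 10.0), JointReading(14.0, 8.0), JointReading(3.1, 3.0)]
hits = detect_collision(readings, thresholds=[2.0, 2.0, 1.5])
if hits:
    print(f"collision suspected on joints {hits}: stop the arm")
# → collision suspected on joints [1]: stop the arm
```

In a real cobot, a positive result would typically trigger a protective stop or a compliant reaction rather than a print statement.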