In a recent article published in the journal Sensors, researchers introduced a real-time sign language recognition (SLR) system that uses wearable sensors to translate sign language into text or speech, enhancing communication for individuals with hearing impairments. This system addresses the increasing demand for effective communication tools, given the substantial global population affected by hearing loss.

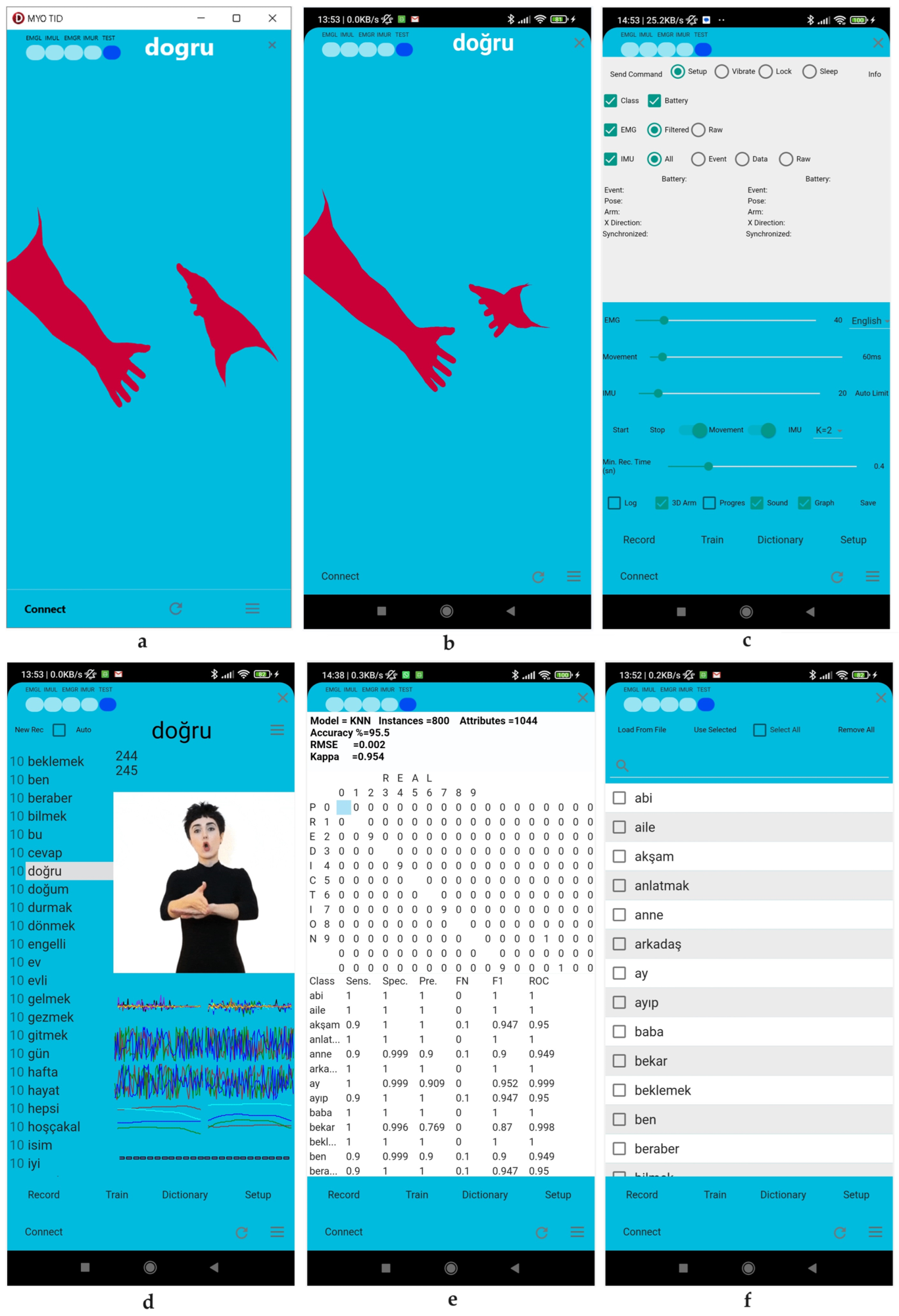

An example of the graphical user interfaces: (a) Windows main user interface; (b) Android main user interface; (c) settings user interface; (d) records user interface; (e) train user interface; (f) dictionary user interface. Image Credit: https://www.mdpi.com/1424-8220/24/14/4613

Sign language draws on several channels of communication, including hand gestures, facial expressions, and body language. Traditional alternatives such as writing and speechreading have their advantages, but they often fall short in real-time interactions. The study proposes a new approach that uses wearable sensors to improve the recognition and interpretation of sign language gestures, offering a more effective means of real-time communication.

The Current Study

The data collection for this study involved two primary sensor types: surface electromyography (sEMG) sensors and inertial measurement units (IMUs).

- sEMG Sensors: Placed on participants' forearm muscles, these sensors captured electrical signals generated during muscle contractions. This data provided insights into muscle activity associated with specific sign language gestures.

- IMUs: Attached to the participants' arms, these units included accelerometers and gyroscopes that measured linear acceleration and angular velocity, respectively. The IMUs tracked arm orientation and movement, which are essential for recognizing the spatial aspects of sign language gestures.

Participants performed a predefined set of sign language gestures, chosen based on their frequency in daily communication. The dataset comprised 80 distinct signs, with each gesture performed multiple times by each participant to ensure robustness. Data was recorded in a controlled environment to minimize external noise and interference.

The raw data collected from the sEMG and IMU sensors underwent preprocessing. The sEMG signals were filtered using a bandpass filter to remove noise and artifacts, isolating the relevant frequency components related to muscle activity. The signals were then normalized to account for variations in muscle activation across participants, ensuring comparability and reducing inter-subject variability.
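The article does not give the filter type, cut-off frequencies, sampling rate, or normalization method, so the following is only a minimal sketch of the two preprocessing steps under stated assumptions: a single second-order band-pass section (RBJ "Audio EQ Cookbook" form) with a hypothetical 20 to 450 Hz pass band at an assumed 1000 Hz sampling rate, followed by z-score normalization. The names `bandpass_biquad` and `zscore` are illustrative, not the authors' code.

```python
import math

def bandpass_biquad(signal, fs, f_low, f_high):
    """Second-order IIR band-pass filter (RBJ cookbook form, 0 dB peak gain).

    A single biquad is a deliberately simple stand-in; the band edges are only
    approximate because Q is derived from the analog bandwidth formula.
    """
    f0 = math.sqrt(f_low * f_high)            # geometric centre frequency (Hz)
    q = f0 / (f_high - f_low)                 # quality factor from the bandwidth
    w0 = 2.0 * math.pi * f0 / fs              # centre frequency in rad/sample
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    # Coefficients normalised by a0; b1 is zero for this band-pass design.
    b0, b2 = alpha / a0, -alpha / a0
    a1, a2 = -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0
    x1 = x2 = y1 = y2 = 0.0                   # filter state (previous samples)
    out = []
    for x in signal:
        y = b0 * x + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out

def zscore(signal):
    """Scale a signal to zero mean and unit standard deviation."""
    n = len(signal)
    mean = sum(signal) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in signal) / n)
    if std == 0.0:
        return [0.0] * n                      # flat signal: nothing to scale
    return [(v - mean) / std for v in signal]

# Hypothetical example: a 100 Hz "muscle" component riding on a DC offset.
FS = 1000.0                                   # assumed sampling rate (Hz)
raw = [0.5 + math.sin(2.0 * math.pi * 100.0 * n / FS) for n in range(2000)]
filtered = bandpass_biquad(raw, FS, 20.0, 450.0)
normalised = zscore(filtered)
```

The DC offset is removed by the band-pass stage while the 100 Hz component passes almost unchanged. Real sEMG pipelines often use higher-order Butterworth filters applied forward and backward (for example, SciPy's `butter` and `filtfilt`); the single biquad here keeps the example dependency-free. Z-scoring each channel is one way to reduce inter-subject amplitude differences; normalizing to a maximum voluntary contraction is another.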

Key features were extracted from the preprocessed signals to facilitate gesture classification:

- sEMG Features: Mean, root mean square (RMS), and waveform length.

- IMU Features: Acceleration magnitude, angular velocity, and orientation angles.
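A minimal sketch of how the listed features might be computed for one analysis window. The root mean square and waveform length formulas are the standard ones; reading "Mean" as the mean absolute value of the rectified sEMG signal, and deriving orientation angles (roll and pitch) from the gravity vector seen by the accelerometer, are assumptions, since the article gives no formulas. All function names are hypothetical.

```python
import math

def mean_absolute_value(x):
    """Mean of the rectified signal (our reading of the article's "Mean")."""
    return sum(abs(v) for v in x) / len(x)

def rms(x):
    """Root mean square amplitude."""
    return math.sqrt(sum(v * v for v in x) / len(x))

def waveform_length(x):
    """Sum of absolute sample-to-sample changes across the window."""
    return sum(abs(b - a) for a, b in zip(x, x[1:]))

def magnitude(vec):
    """Euclidean norm of a 3-axis reading (accelerometer or gyroscope)."""
    return math.sqrt(sum(c * c for c in vec))

def roll_pitch_deg(ax, ay, az):
    """Tilt angles from gravity; assumes the arm is roughly static."""
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.sqrt(ay * ay + az * az))
    return math.degrees(roll), math.degrees(pitch)

def feature_vector(emg_window, accel, gyro):
    """Hypothetical 7-element feature vector for one window.

    emg_window: list of sEMG samples; accel, gyro: (x, y, z) readings,
    e.g. averaged over the same window.
    """
    roll, pitch = roll_pitch_deg(*accel)
    return [
        mean_absolute_value(emg_window),
        rms(emg_window),
        waveform_length(emg_window),
        magnitude(accel),
        magnitude(gyro),
        roll,
        pitch,
    ]

features = feature_vector([1.0, -3.0, 2.0], (0.0, 0.0, 9.81), (0.0, 0.0, 0.5))
```

Accelerometer-only tilt is reliable only when the arm is close to static and cannot recover yaw; systems that need full orientation typically fuse gyroscope and accelerometer readings.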

Several machine learning algorithms were employed to classify the extracted features and recognize the sign language gestures, including K-Nearest Neighbors (KNN), Multilayer Perceptron (MLP), and Support Vector Machines (SVM). The performance of the SLR system was evaluated using accuracy, precision, recall, and F1-score, providing a comprehensive assessment of the model's effectiveness in recognizing sign language gestures. Results were compared across algorithms to identify the most effective approach for real-time sign language recognition.
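The four evaluation metrics can be computed directly from the true and predicted labels. The sketch below uses macro averaging (every sign weighted equally), which is an assumption: the article does not say how per-class scores were combined. The function name and the toy labels are hypothetical.

```python
from collections import Counter

def classification_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall and F1 over all labels."""
    labels = set(y_true) | set(y_pred)
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1    # predicted this label, but it was wrong
            fn[truth] += 1   # the true label was missed

    def ratio(num, den):
        return num / den if den else 0.0

    precisions, recalls, f1s = [], [], []
    for label in labels:
        p = ratio(tp[label], tp[label] + fp[label])
        r = ratio(tp[label], tp[label] + fn[label])
        precisions.append(p)
        recalls.append(r)
        f1s.append(ratio(2 * p * r, p + r))

    n = len(labels)
    return {
        "accuracy": ratio(sum(tp.values()), len(y_true)),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }

y_true = ["hello", "hello", "thanks", "yes"]
y_pred = ["hello", "thanks", "thanks", "yes"]
metrics = classification_metrics(y_true, y_pred)
```

With 80 signs, a weighted average (each class weighted by its number of samples) would give similar numbers if the classes are balanced, which the study's repeated recordings of each sign suggest but do not guarantee.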

Results and Discussion

The classification accuracy of each algorithm was evaluated using a 10-fold cross-validation approach. The results revealed the following:

- Support Vector Machines (SVM): Achieved the highest accuracy, at 99.875%. This performance is attributed to SVM's proficiency in handling high-dimensional data and its robustness against overfitting, which make it well suited to complex classification tasks.

- K-Nearest Neighbors (KNN): Achieved a lower accuracy of 95.320%, the lowest of the three algorithms. This gap can be linked to KNN's sensitivity to the choice of the parameter K and to variations in the training data distribution.

- Multilayer Perceptron (MLP): Achieved an accuracy of 96.450%, which, while commendable, was lower than that of SVM. This reflects the inherent challenges of training neural-network models such as MLPs, especially when the dataset is limited in size.
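To make the role of the parameter K and the 10-fold cross-validation protocol concrete, here is a small pure-Python k-nearest-neighbours classifier evaluated on shuffled folds. It is a sketch under assumptions, not the authors' pipeline: Euclidean distance, non-stratified folds, pooled accuracy, the toy data, and the names `knn_predict` and `k_fold_accuracy` are all illustrative choices.

```python
import math
import random
from collections import Counter

def knn_predict(train_X, train_y, x, k):
    """Majority label among the k training vectors closest to x (Euclidean)."""
    neighbours = sorted(zip(train_X, train_y), key=lambda pair: math.dist(pair[0], x))
    # Counter keeps first-seen order, so a tied vote goes to the label seen nearest.
    votes = Counter(label for _, label in neighbours[:k])
    return votes.most_common(1)[0][0]

def k_fold_accuracy(X, y, k, n_folds=10, seed=0):
    """Pooled accuracy of k-NN over n_folds shuffled (non-stratified) folds."""
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::n_folds] for i in range(n_folds)]
    correct = 0
    for fold in folds:
        held_out = set(fold)
        train_X = [X[i] for i in indices if i not in held_out]
        train_y = [y[i] for i in indices if i not in held_out]
        for i in fold:
            if knn_predict(train_X, train_y, X[i], k) == y[i]:
                correct += 1
    return correct / len(X)

# Hypothetical toy data: two well-separated clusters of 2-D feature vectors.
X = [(0.1 * i, 0.0) for i in range(20)] + [(10.0 + 0.1 * i, 10.0) for i in range(20)]
y = ["sign_a"] * 20 + ["sign_b"] * 20
scores = {k: k_fold_accuracy(X, y, k) for k in (1, 3, 5)}
```

Re-running `k_fold_accuracy` with different values of `k` is the simplest way to probe the sensitivity to K described above. On real sensor data, features would normally be scaled first, because k-NN distances are dominated by whichever feature has the largest numeric range.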

In addition to classification accuracy, the system’s real-time processing capability was assessed. The average feature extraction and classification time per gesture was 21.2 milliseconds. This rapid processing is crucial for effective communication, allowing for near-instantaneous interaction between users. The minimal delay experienced by participants during testing underscores the system’s suitability for practical, everyday use.
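A per-gesture figure such as the reported 21.2 ms is typically obtained by timing the feature-extraction and classification step over many gestures and averaging. The harness below is a hypothetical sketch of that measurement; `average_latency_ms` and `dummy_pipeline` are illustrative names, and `dummy_pipeline` merely stands in for the real processing.

```python
import time
from statistics import mean

def average_latency_ms(process, gestures, warmup=5):
    """Mean wall-clock time, in milliseconds, of process(g) over all gestures."""
    for g in gestures[:warmup]:
        process(g)                     # warm-up calls, excluded from the timing
    timings = []
    for g in gestures:
        start = time.perf_counter()
        process(g)
        timings.append((time.perf_counter() - start) * 1000.0)
    return mean(timings)

# Hypothetical stand-in for "extract features, then classify" on one gesture.
def dummy_pipeline(window):
    return sum(v * v for v in window)

windows = [[float(i)] * 200 for i in range(50)]
latency = average_latency_ms(dummy_pipeline, windows)
```

For scale, 21.2 ms per gesture means that, run back to back, the pipeline could process roughly 47 gestures per second (1000 / 21.2 ≈ 47.2).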

Overall, these results highlight the SVM algorithm as the most effective for sign language gesture recognition in this study, while also demonstrating the system's capability to facilitate real-time communication efficiently.

Conclusion

In conclusion, this study represents a significant advancement in sign language recognition technology, offering the potential to greatly enhance communication for individuals with hearing impairments. The researchers demonstrated that their system effectively translates sign language into text or speech in real time, addressing a critical need within the disability community.

The authors emphasized the importance of further research to validate the system's effectiveness across diverse user groups and various sign languages. They also expressed a commitment to developing an improved device that exceeds the capabilities of current technologies, with the goal of making assistive tools more accessible to those with hearing impairments.

The findings of this study highlight the ongoing need for innovation in this field to foster inclusivity and improve the quality of life for people with hearing loss. The researchers' dedication to advancing this technology underscores the potential for future developments to bring significant benefits to the disability community.

Journal Reference

Umut İ, Kumdereli ÜC. (2024). Novel Wearable System to Recognize Sign Language in Real Time. Sensors 24(14):4613. DOI: 10.3390/s24144613, https://www.mdpi.com/1424-8220/24/14/4613