Jun 1 2017

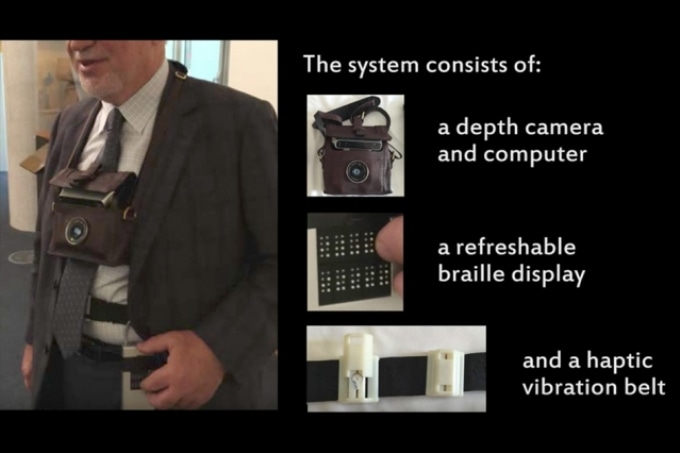

New algorithms power a prototype system for helping visually impaired users avoid obstacles and identify objects. Courtesy of the researchers

For decades, computer scientists have been working on automatic navigation systems to help the visually impaired. However, it has been difficult to come up with anything as easy and reliable to use as the white cane, the metal-tipped cane that visually impaired people commonly use to identify clear walking paths.

White canes do have a few drawbacks, though. One is that the obstacles they come in contact with are sometimes other people. Another is that they cannot identify particular types of objects, such as chairs or tables, or determine whether a chair is already occupied.

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have created a new system that uses a 3D camera, a belt with individually controllable vibrational motors distributed around it, and an electronically reconfigurable Braille interface to give visually impaired users more information about their environments.

The system can be used in conjunction with a cane or as an alternative to one. In a paper to be presented shortly at the International Conference on Robotics and Automation, the researchers describe the system and a series of usability studies they conducted with visually impaired volunteers.

We did a couple of different tests with blind users. Having something that didn’t infringe on their other senses was important. So we didn't want to have audio; we didn’t want to have something around the head, vibrations on the neck — all of those things, we tried them out, but none of them were accepted. We found that the one area of the body that is the least used for other senses is around your abdomen.

Robert Katzschmann, Graduate Student, Mechanical Engineering, MIT

Katzschmann is joined on the paper by his advisor Daniela Rus, an Andrew and Erna Viterbi Professor of Electrical Engineering and Computer Science; his fellow first author Hsueh-Cheng Wang, who was a postdoc at MIT when the work was completed and is presently an assistant professor of electrical and computer engineering at National Chiao Tung University in Taiwan; Santani Teng, a postdoc in CSAIL; Brandon Araki, a graduate student in mechanical engineering; and Laura Giarré, a professor of electrical engineering at the University of Modena and Reggio Emilia in Italy.

Parsing the world

The researchers’ system is made up of a 3D camera worn in a pouch hung around the neck; the sensor belt, which has five vibrating motors spaced evenly around its forward half; a processing unit that runs the team’s proprietary algorithms; and the reconfigurable Braille interface, which is worn at the user’s side.

The key to the system is an algorithm for rapidly identifying surfaces and their orientations from the 3D-camera data. The researchers experimented with three different types of 3D cameras, which used three different techniques to gauge depth, but all of them produced relatively low-resolution images (640 by 480 pixels) with both depth and color measurements for each pixel.

First, the algorithm groups the pixels into clusters of three. Because the pixels have associated location data, each cluster determines a plane. If the orientations of the planes defined by five nearby clusters are within 10 degrees of each other, the system concludes that it has found a surface. It does not need to determine the extent of the surface or what type of object it belongs to; it simply registers an obstacle at that location and starts to buzz the associated motor if the wearer gets within 2 meters of it.
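The surface test described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' implementation: the function names are hypothetical, the article does not say how "nearby" clusters are chosen (here the caller passes them in), and "within 10 degrees of each other" is read as a pairwise check on the plane normals.

```python
import math

def plane_normal(p0, p1, p2):
    """Unit normal of the plane through three 3D points, or None if they are collinear."""
    ux, uy, uz = (p1[i] - p0[i] for i in range(3))
    vx, vy, vz = (p2[i] - p0[i] for i in range(3))
    # Cross product of the two edge vectors gives the plane normal.
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        return None
    return (nx / length, ny / length, nz / length)

def angle_between_deg(n1, n2):
    """Angle in degrees between two plane orientations, ignoring the sign of the normals."""
    dot = abs(sum(a * b for a, b in zip(n1, n2)))
    return math.degrees(math.acos(min(1.0, dot)))

def is_surface(clusters, max_angle_deg=10.0, required=5):
    """True if at least `required` clusters define planes that all agree within the tolerance.

    `clusters` is a list of 3-point tuples that the caller already considers nearby
    (an assumption; the article does not describe the neighborhood rule).
    """
    normals = [plane_normal(*c) for c in clusters]
    normals = [n for n in normals if n is not None]
    if len(normals) < required:
        return False
    return all(
        angle_between_deg(a, b) <= max_angle_deg
        for i, a in enumerate(normals)
        for b in normals[i + 1:]
    )

def should_buzz(distance_m, threshold_m=2.0):
    """True once the wearer is within the 2-meter range mentioned in the article."""
    return distance_m <= threshold_m
```

Five clusters sampled from a flat floor would pass `is_surface`, while swapping one of them for a cluster on a perpendicular wall would fail the pairwise angle check.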

Chair identification is similar but a little more stringent. The system has to complete three distinct surface identifications in the same general area, rather than just one; this ensures that the chair is unoccupied. The surfaces have to be roughly parallel to the ground, and they have to fall within a set range of heights.
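As a rough sketch of the chair criteria just described, the check below looks for three surfaces that are roughly horizontal, sit within a height range, and lie close together. The `Surface` type, the function names, and every threshold (tilt tolerance, height range, grouping radius) are hypothetical placeholders; the article does not give the values the researchers used.

```python
import math
from dataclasses import dataclass

@dataclass
class Surface:
    center: tuple  # (x, y, z) in meters; z is height above the ground
    normal: tuple  # unit normal of the detected surface

UP = (0.0, 0.0, 1.0)  # assumed direction perpendicular to the ground

def is_horizontal(surface, max_tilt_deg=10.0):
    """True if the surface is roughly parallel to the ground (tolerance is an assumption)."""
    dot = abs(sum(a * b for a, b in zip(surface.normal, UP)))
    return math.degrees(math.acos(min(1.0, dot))) <= max_tilt_deg

def looks_like_free_chair(surfaces, min_h=0.3, max_h=1.0, max_spread_m=0.6, required=3):
    """True if `required` horizontal surfaces in the height range sit in one general area.

    The height range and the grouping radius are illustrative values only.
    """
    candidates = [
        s for s in surfaces
        if is_horizontal(s) and min_h <= s.center[2] <= max_h
    ]
    for anchor in candidates:
        # Count candidates whose ground-plane position is close to this one.
        near = [
            s for s in candidates
            if math.dist(anchor.center[:2], s.center[:2]) <= max_spread_m
        ]
        if len(near) >= required:
            return True
    return False
```

Requiring several surfaces instead of one is what makes the test stricter: a single horizontal patch at the right height is not enough to report a chair.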

Tactile data

The belt motors can vary the intensity, frequency, and duration of their vibrations, as well as the intervals between them, in order to send different types of tactile signals to the user. For example, an increase in intensity and frequency usually indicates that the wearer is approaching an obstacle in the direction indicated by that particular motor. When the system is in chair-finding mode, however, a double pulse indicates the direction in which an unoccupied chair can be found.
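One way to picture the obstacle signal is as a mapping from an obstacle's bearing and distance to a motor index and a vibration strength. The sketch below assumes five motors at evenly spaced bearings from -90 to +90 degrees and a linear ramp inside the 2-meter range; both the layout angles and the ramp shape are assumptions, and the function names are hypothetical.

```python
def motor_for_bearing(bearing_deg, n_motors=5):
    """Index (0 = far left, n_motors - 1 = far right) of the motor closest to a bearing.

    Bearing is measured in degrees from straight ahead and clamped to the forward half.
    """
    clamped = max(-90.0, min(90.0, bearing_deg))
    step = 180.0 / (n_motors - 1)  # assumed angular spacing between motors
    return round((clamped + 90.0) / step)

def vibration_strength(distance_m, range_m=2.0):
    """Strength in [0, 1] that grows as the obstacle gets closer (linear ramp, an assumption).

    A motor driver could use this one value to scale both intensity and frequency.
    """
    if distance_m >= range_m:
        return 0.0
    return 1.0 - max(0.0, distance_m) / range_m
```

For instance, an obstacle straight ahead at 1 meter would map to the middle motor at half strength under these assumptions.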

The Braille interface is made up of rows of five reconfigurable Braille pads. The objects in the user’s environment are described by symbols displayed on the pads, for instance, a “c” for chair or a “t” for table. The position of the symbol in the row indicates the direction in which it can be found; the distance is indicated by the column it appears in. A skilled Braille user should be able to find that the signals from the Braille interface and the belt-mounted motors correspond.

In tests, the chair-finding system reduced subjects’ contacts with objects other than the chairs they sought by 80%, and the navigation system reduced the number of cane collisions with people loitering around a hallway by 86%.