Jun 28 2014

Remember those molecule models made from sticks and balls you could assemble to study complex molecules back in school? Something similar has taken shape in the Interactive Geometry Lab at ETH Zurich.

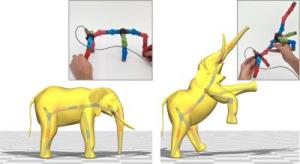

The versatile joystick developed by ETH Zurich researchers allows articulating animated characters. Credit: Interactive Geometry Lab / ETH Zurich

ETH professor Olga Sorkine-Hornung and her team do not study molecules but ways to manipulate virtual shapes, such as animated characters on a computer screen. They have now developed an input device, or "joystick", for moving and posing virtual characters that, like the molecule models, is made up of modular building blocks. An artist can assemble these blocks into an approximate representation of any virtual character, be it a human, a dog, an elephant, or even individual body parts such as an arm or a hand.

Modular principle

In collaboration with the Autonomous Systems Lab led by ETH professor Roland Siegwart, Sorkine-Hornung's team developed a modular "input puppet" with integrated sensors that can take any shape: a set of 3D-printed modular building blocks can be assembled into an approximation of any virtual character. Sensors in each joint measure the bending angle or the degree of twisting, and pass this information to software that computes how the virtual character should move.

«The software assists the artist in registering their newly assembled device to the character's shape», explains Sorkine-Hornung. This lets the artist match each physical joint of the input device to the corresponding virtual joint of the animated character. As a result, even an input puppet with a rather short neck can be fitted to the long neck of an animated giraffe.

Blueprints for further research

The researchers have made the blueprints for their device's building blocks freely available as Open Hardware, hoping to foster further research. «Anyone can 3D-print the separate units and, with the help of an engineer, integrate the electronics», explains Sorkine-Hornung. A set of 25 ready-made building blocks might also become commercially available at some point. «We are going to present the device at the SIGGRAPH conference and exhibition on Computer Graphics and Interactive Techniques this coming August. We hope to receive feedback on whether there is demand for a commercially available set, and on how the device's design could be improved», says Sorkine-Hornung. The current design allows bending and twisting only as two separate movements. One possible improvement the researchers plan to look into is ball-and-socket joints, similar to the human shoulder joint, which would make the puppet easier to manipulate.

Animation artists usually train for years to learn how to manipulate virtual characters. Every movement is made up of key frames: snapshots of the movement from which software interpolates the whole smooth motion. To make a virtual character move realistically, the artist has to define the key frames, but dragging each virtual limb into the required pose with a computer mouse is tedious and time-consuming. Researchers are therefore working on alternative input devices, such as puppets that artists can manipulate directly on their desk. Some approaches capture the puppet's motion with several cameras. However, the artist's hands obscure parts of the puppet while handling it, which makes real-time manipulation difficult. Other approaches avoid this problem by integrating sensors into the puppet's joints. These puppets, however, usually have a single predefined shape, for instance that of a human, which is not suitable for animating a dog.
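To illustrate what "interpolating the whole smooth motion" from key frames means, here is a minimal sketch that assumes each key frame stores one angle per joint and that the in-between poses are computed by plain linear interpolation. The article does not say how the researchers' software interpolates; production animation tools typically use smoother curves such as splines. The function name `interpolate_pose` and the joint names are hypothetical.

```python
from bisect import bisect_right


def interpolate_pose(keyframes, t):
    """Return joint angles at time t by linearly interpolating between
    the two key frames that bracket t.

    keyframes: list of (time, {joint_name: angle_in_degrees}) sorted by time.
    Hypothetical data layout, chosen only for illustration.
    """
    times = [time for time, _ in keyframes]
    # Before the first or after the last key frame, hold the nearest pose.
    if t <= times[0]:
        return dict(keyframes[0][1])
    if t >= times[-1]:
        return dict(keyframes[-1][1])
    # Index of the first key frame strictly later than t.
    i = bisect_right(times, t)
    t0, pose0 = keyframes[i - 1]
    t1, pose1 = keyframes[i]
    alpha = (t - t0) / (t1 - t0)  # 0 at pose0, 1 at pose1
    # Blend every joint angle independently.
    return {joint: (1 - alpha) * pose0[joint] + alpha * pose1[joint]
            for joint in pose0}


if __name__ == "__main__":
    keys = [(0, {"elbow": 0.0, "knee": 10.0}),
            (10, {"elbow": 90.0, "knee": 30.0})]
    print(interpolate_pose(keys, 5))  # {'elbow': 45.0, 'knee': 20.0}
```

Here the artist only defines the poses at frames 0 and 10; every frame in between is filled in automatically. A physical input puppet speeds up the step of defining those key poses, not the interpolation itself.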